
A scalable crawler framework. It covers the whole lifecycle of a crawler: downloading, URL management, content extraction, and persistence. It can simplify the development of a specific crawler.

Features:

- Simple core with high flexibility.

- Simple API for HTML extraction.

- Annotate POJOs to customize a crawler, with no configuration required.

- Multi-threading and distributed crawling support.

- Easy to integrate.
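Multi-threaded crawling needs URL management that several worker threads can call at the same time without queuing a URL twice. The following is a minimal sketch of that idea for a single-process crawler only; `ConcurrentUrlQueue` is a hypothetical name, not a WebMagic class, and distributed crawling would keep this state in a shared external store instead, which the sketch does not cover.

```java
import java.util.Queue;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentLinkedQueue;

// Hypothetical thread-safe URL queue for a single-process, multi-threaded crawler.
class ConcurrentUrlQueue {
    private final Queue<String> pending = new ConcurrentLinkedQueue<>();
    private final Set<String> seen = ConcurrentHashMap.newKeySet();

    // Queues the URL if no thread has queued it before; returns true if queued.
    // add() on a concurrent key set is atomic, so when two threads offer the
    // same URL, only one of them gets true and enqueues it.
    boolean offer(String url) {
        if (seen.add(url)) {
            pending.offer(url);
            return true;
        }
        return false;
    }

    // Returns the next URL, or null if nothing is pending right now.
    String poll() {
        return pending.poll();
    }

    // Number of URLs still waiting to be crawled.
    int size() {
        return pending.size();
    }
}
```

If four threads each offer the same 100 URLs, exactly 100 entries end up pending, because the concurrent set decides atomically which thread wins each URL.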

Install:

Add dependencies to your pom.xml:

```xml
<dependency>
    <groupId>us.codecraft</groupId>
    <artifactId>webmagic-core</artifactId>
    <version>0.4.3</version>
</dependency>
<dependency>
    <groupId>us.codecraft</groupId>
    <artifactId>webmagic-extension</artifactId>
    <version>0.4.3</version>
</dependency>
```

WebMagic uses slf4j with the slf4j-log4j12 binding. If you use a different slf4j implementation, exclude slf4j-log4j12 from the WebMagic dependencies:

```xml
<!-- Place inside each WebMagic <dependency> element -->
<exclusions>
    <exclusion>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-log4j12</artifactId>
    </exclusion>
</exclusions>
```

Get Started:

First crawler:

Write a class that implements PageProcessor:

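```java
import java.util.List;

import us.codecraft.webmagic.Page;
import us.codecraft.webmagic.Site;
import us.codecraft.webmagic.Spider;
import us.codecraft.webmagic.pipeline.ConsolePipeline;
import us.codecraft.webmagic.processor.PageProcessor;

public class OschinaBlogPageProcesser implements PageProcessor {

    private Site site = Site.me().setDomain("my.oschina.net");

    @Override
    public void process(Page page) {
        // Collect links to individual blog posts and queue them for crawling
        List<String> links = page.getHtml().links().regex("http://my\\.oschina\\.net/flashsword/blog/\\d+").all();
        page.addTargetRequests(links);
        // Extract fields with XPath and CSS selectors
        page.putField("title", page.getHtml().xpath("//div[@class='BlogEntity']/div[@class='BlogTitle']/h1").toString());
        page.putField("content", page.getHtml().$("div.content").toString());
        page.putField("tags", page.getHtml().xpath("//div[@class='BlogTags']/a/text()").all());
    }

    @Override
    public Site getSite() {
        return site;
    }

    public static void main(String[] args) {
        // Start from the blog index and print extracted fields to the console
        Spider.create(new OschinaBlogPageProcesser()).addUrl("http://my.oschina.net/flashsword/blog")
                .addPipeline(new ConsolePipeline()).run();
    }
}
```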

- `page.addTargetRequests(links)`: adds URLs for crawling.
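In the example above, `links().regex(...)` narrows the page's links to blog-post URLs before `addTargetRequests` queues them. A rough stdlib-only approximation of that filtering step with `java.util.regex` follows; the `LinkFilter` class is hypothetical and may behave differently from WebMagic's selector, for example because it requires each link to match the pattern in full.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical helper approximating the link-filtering step of the
// PageProcessor example with plain java.util.regex.
class LinkFilter {
    // Same pattern as in the example: a blog post URL ending in digits.
    static final Pattern BLOG_POST =
            Pattern.compile("http://my\\.oschina\\.net/flashsword/blog/\\d+");

    // Returns, in order, the candidate links that match the pattern in full.
    static List<String> filter(List<String> links) {
        List<String> result = new ArrayList<>();
        for (String link : links) {
            if (BLOG_POST.matcher(link).matches()) {
                result.add(link);
            }
        }
        return result;
    }
}
```

Given the blog index page's links, only URLs such as `http://my.oschina.net/flashsword/blog/12345` survive; the index URL itself and links to other users' blogs are dropped.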

You can also use annotations:

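```java
import java.util.List;

import us.codecraft.webmagic.Site;
import us.codecraft.webmagic.model.OOSpider;
import us.codecraft.webmagic.model.annotation.ExtractBy;
import us.codecraft.webmagic.model.annotation.TargetUrl;
import us.codecraft.webmagic.pipeline.ConsolePageModelPipeline;

// Pages whose URL matches @TargetUrl are extracted into this POJO
@TargetUrl("http://my.oschina.net/flashsword/blog/\\d+")
public class OschinaBlog {

    @ExtractBy("//title")
    private String title;

    @ExtractBy(value = "div.BlogContent", type = ExtractBy.Type.Css)
    private String content;

    @ExtractBy(value = "//div[@class='BlogTags']/a/text()", multi = true)
    private List<String> tags;

    public static void main(String[] args) {
        // Crawl from the blog index and print each extracted OschinaBlog
        OOSpider.create(Site.me(), new ConsolePageModelPipeline(), OschinaBlog.class)
                .addUrl("http://my.oschina.net/flashsword/blog").run();
    }
}
```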
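The annotation style works by reading field annotations at runtime and filling the fields from the page. The sketch below illustrates that general idea with plain Java reflection, using a regex instead of XPath or CSS. `ExtractByRegex`, `BlogModel`, and `RegexModelExtractor` are hypothetical names for illustration, and this is not WebMagic's actual implementation of `@ExtractBy`.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Constructor;
import java.lang.reflect.Field;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical annotation: group 1 of the regex supplies the field's value.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.FIELD)
@interface ExtractByRegex {
    String value();
}

// Hypothetical model class, loosely mirroring OschinaBlog.
class BlogModel {
    @ExtractByRegex("<title>(.*?)</title>")
    String title;

    String notes; // not annotated, so the extractor leaves it null
}

// Creates an instance of the model and fills each annotated field from the HTML.
class RegexModelExtractor {
    static <T> T extract(String html, Class<T> type) {
        try {
            Constructor<T> ctor = type.getDeclaredConstructor();
            ctor.setAccessible(true);
            T model = ctor.newInstance();
            for (Field field : type.getDeclaredFields()) {
                ExtractByRegex rule = field.getAnnotation(ExtractByRegex.class);
                if (rule == null) {
                    continue; // no extraction rule on this field
                }
                // DOTALL lets the pattern span line breaks in the HTML
                Matcher m = Pattern.compile(rule.value(), Pattern.DOTALL).matcher(html);
                if (m.find()) {
                    field.setAccessible(true);
                    field.set(model, m.group(1));
                }
            }
            return model;
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("cannot populate " + type.getName(), e);
        }
    }
}
```

Keeping the rule on the field means adding a new extracted value only requires a new annotated field, which is the "no configuration" property listed in the features.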

Docs and samples:

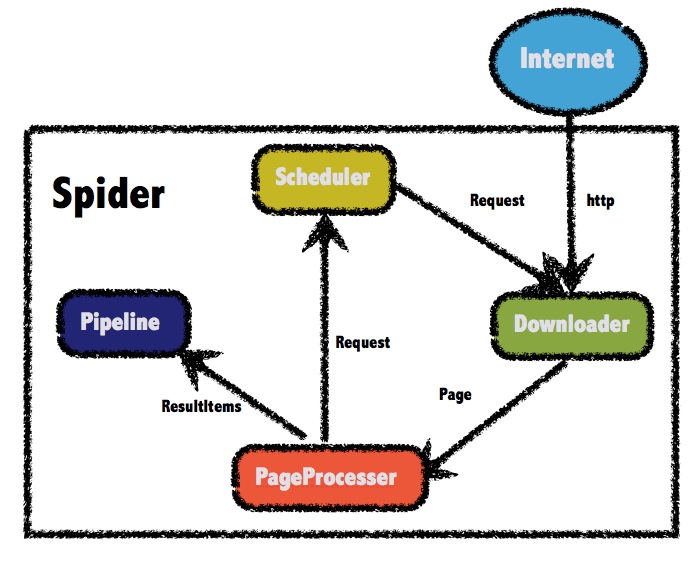

The architecture of webmagic (refered to Scrapy)
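The architecture splits a crawl into downloading, URL scheduling, page processing, and persistence. As a minimal single-threaded sketch of how such stages can be wired into one loop, the class below uses `java.util.function` types in place of real component interfaces. `MiniSpider` and its nested `Result` are hypothetical; WebMagic's own `Spider` is considerably more elaborate, for example supporting multiple threads.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.Set;
import java.util.function.Consumer;
import java.util.function.Function;

// Hypothetical wiring of the four stages: a scheduler (queue + seen set)
// feeds the downloader, the processor extracts fields and new links,
// and the pipeline persists the fields.
class MiniSpider {
    // Result of processing one page: extracted fields plus links to follow.
    static final class Result {
        final Map<String, String> fields = new HashMap<>();
        final List<String> links = new ArrayList<>();
    }

    private final Function<String, String> downloader;    // URL -> HTML, null on failure
    private final Function<String, Result> processor;     // HTML -> fields and links
    private final Consumer<Map<String, String>> pipeline; // persists extracted fields

    MiniSpider(Function<String, String> downloader,
               Function<String, Result> processor,
               Consumer<Map<String, String>> pipeline) {
        this.downloader = downloader;
        this.processor = processor;
        this.pipeline = pipeline;
    }

    // Crawls breadth-first from startUrl until no unseen URLs remain.
    void run(String startUrl) {
        Queue<String> pending = new ArrayDeque<>();
        Set<String> seen = new HashSet<>();
        pending.add(startUrl);
        seen.add(startUrl);
        while (!pending.isEmpty()) {
            String html = downloader.apply(pending.poll());
            if (html == null) {
                continue; // download failed; skip this URL
            }
            Result result = processor.apply(html);
            pipeline.accept(result.fields);
            for (String link : result.links) {
                if (seen.add(link)) { // schedule each URL at most once
                    pending.add(link);
                }
            }
        }
    }
}
```

Because each stage is passed in separately, a test can swap the downloader for an in-memory map of pages, much as a real framework lets you plug in a different downloader or pipeline.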

Javadocs: http://code4craft.github.io/webmagic/docs/en/

Samples are available in the webmagic-samples module.

License:

Licensed under the Apache 2.0 License.

Contributors:

Thanks to these people for committing source code, reporting bugs, or suggesting new features:

- yuany

- yxssfxwzy

- linkerlin

- d0ngw

- xuchaoo

- supermicah

- SimpleExpress

- aruanruan

- l1z2g9

- zhegexiaohuozi

- ywooer

- yyw258520

- perfecking

- lidongyang

Thanks:

I referred to the following projects while writing WebMagic:

- Scrapy: a crawler framework in Python.

- Spiderman: another crawler framework in Java.