
WebMagic is a scalable crawler framework. It covers the whole lifecycle of a crawler: downloading, URL management, content extraction and persistence. It simplifies the development of a specific crawler.

Features:

- Simple core with high flexibility.

- Simple API for HTML extraction.

- Annotated POJOs to customize a crawler, with no configuration files.

- Multi-threading and distributed crawling support.

- Easy to integrate.

Install:

Add dependencies to your pom.xml:

    <dependency>
        <groupId>us.codecraft</groupId>
        <artifactId>webmagic-core</artifactId>
        <version>0.5.1</version>
    </dependency>
    <dependency>
        <groupId>us.codecraft</groupId>
        <artifactId>webmagic-extension</artifactId>
        <version>0.5.1</version>
    </dependency>

WebMagic uses slf4j with the slf4j-log4j12 implementation. If you use your own slf4j implementation, exclude slf4j-log4j12 by adding the following inside the corresponding dependency element:

    <exclusions>
        <exclusion>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-log4j12</artifactId>
        </exclusion>
    </exclusions>

Get Started:

First crawler:

Write a class that implements PageProcessor. For example, the following crawler collects GitHub repository information.

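    import us.codecraft.webmagic.Page;
    import us.codecraft.webmagic.Site;
    import us.codecraft.webmagic.Spider;
    import us.codecraft.webmagic.processor.PageProcessor;

    public class GithubRepoPageProcessor implements PageProcessor {

        // Retry failed downloads 3 times and wait 1000 ms between requests.
        private Site site = Site.me().setRetryTimes(3).setSleepTime(1000);

        @Override
        public void process(Page page) {
            // Queue every repository link found on the page for crawling.
            page.addTargetRequests(page.getHtml().links().regex("(https://github\\.com/\\w+/\\w+)").all());
            // The author is the first path segment of the URL.
            page.putField("author", page.getUrl().regex("https://github\\.com/(\\w+)/.*").toString());
            page.putField("name", page.getHtml().xpath("//h1[@class='entry-title public']/strong/a/text()").toString());
            if (page.getResultItems().get("name") == null) {
                // Skip pages that have no repository name.
                page.setSkip(true);
            }
            page.putField("readme", page.getHtml().xpath("//div[@id='readme']/tidyText()"));
        }

        @Override
        public Site getSite() {
            return site;
        }

        public static void main(String[] args) {
            // Start from one profile page and crawl with 5 threads.
            Spider.create(new GithubRepoPageProcessor()).addUrl("https://github.com/code4craft").thread(5).run();
        }
    }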

- page.addTargetRequests(links): adds URLs for crawling.
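To see which links the regular expressions in the processor would pick up, here is a minimal sketch that uses only java.util.regex and no WebMagic classes. The class and method names (RepoLinkFilterSketch, selectRepoLinks, extractAuthor) are hypothetical, and the sketch assumes the selector keeps the first capture group of each match, which may differ in detail from what WebMagic does internally.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper, not part of WebMagic: replays the two regexes
// from GithubRepoPageProcessor with plain java.util.regex.
class RepoLinkFilterSketch {

    static final Pattern REPO_LINK = Pattern.compile("(https://github\\.com/\\w+/\\w+)");
    static final Pattern AUTHOR = Pattern.compile("https://github\\.com/(\\w+)/.*");

    /** Returns the first capture group of every link that contains a match. */
    static List<String> selectRepoLinks(List<String> links) {
        List<String> selected = new ArrayList<>();
        for (String link : links) {
            Matcher m = REPO_LINK.matcher(link);
            if (m.find()) {
                selected.add(m.group(1));
            }
        }
        return selected;
    }

    /** Returns the author segment of a repository URL, or null if there is none. */
    static String extractAuthor(String url) {
        Matcher m = AUTHOR.matcher(url);
        return m.find() ? m.group(1) : null;
    }

    public static void main(String[] args) {
        List<String> links = Arrays.asList(
                "https://github.com/code4craft/webmagic", // user/repo: selected
                "https://github.com/code4craft",          // profile page only: not selected
                "https://example.com/about");             // other site: not selected
        System.out.println(selectRepoLinks(links));
        System.out.println(extractAuthor("https://github.com/code4craft/webmagic"));
    }
}
```

Only URLs with both a user and a repository segment pass the filter, so the profile page used as the start URL is crawled for links but is not itself queued again by this pattern.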

You can also define the crawler with annotations:

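    import us.codecraft.webmagic.Site;
    import us.codecraft.webmagic.model.ConsolePageModelPipeline;
    import us.codecraft.webmagic.model.OOSpider;
    import us.codecraft.webmagic.model.annotation.ExtractBy;
    import us.codecraft.webmagic.model.annotation.ExtractByUrl;
    import us.codecraft.webmagic.model.annotation.HelpUrl;
    import us.codecraft.webmagic.model.annotation.TargetUrl;

    @TargetUrl("https://github.com/\\w+/\\w+")
    @HelpUrl("https://github.com/\\w+")
    public class GithubRepo {

        // Pages without a name are not turned into a GithubRepo.
        @ExtractBy(value = "//h1[@class='entry-title public']/strong/a/text()", notNull = true)
        private String name;

        // The author is taken from the page URL instead of the page body.
        @ExtractByUrl("https://github\\.com/(\\w+)/.*")
        private String author;

        @ExtractBy("//div[@id='readme']/tidyText()")
        private String readme;

        public static void main(String[] args) {
            OOSpider.create(Site.me().setSleepTime(1000)
                    , new ConsolePageModelPipeline(), GithubRepo.class)
                    .addUrl("https://github.com/code4craft").thread(5).run();
        }
    }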
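The idea behind the annotation style can be illustrated with a small reflection sketch: a field declares how it should be filled, and a generic routine reads that declaration at runtime. This is not WebMagic's implementation. The annotation UrlRegex, the Repo class and the extract method are hypothetical stand-ins that use only the JDK.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Constructor;
import java.lang.reflect.Field;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class AnnotationExtractionSketch {

    /** Hypothetical stand-in for an annotation such as @ExtractByUrl. */
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.FIELD)
    @interface UrlRegex {
        String value();
    }

    /** A POJO whose field declares how it is filled from the page URL. */
    static class Repo {
        @UrlRegex("https://github\\.com/(\\w+)/.*")
        private String author;

        String getAuthor() {
            return author;
        }
    }

    /** Creates a model instance and fills every @UrlRegex field from the URL. */
    static <T> T extract(Class<T> type, String url) {
        try {
            Constructor<T> ctor = type.getDeclaredConstructor();
            ctor.setAccessible(true);
            T model = ctor.newInstance();
            for (Field field : type.getDeclaredFields()) {
                UrlRegex rule = field.getAnnotation(UrlRegex.class);
                if (rule == null) {
                    continue; // field carries no extraction rule
                }
                Matcher m = Pattern.compile(rule.value()).matcher(url);
                if (m.find()) {
                    field.setAccessible(true);
                    field.set(model, m.group(1)); // first capture group becomes the value
                }
            }
            return model;
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot populate " + type.getName(), e);
        }
    }

    public static void main(String[] args) {
        Repo repo = extract(Repo.class, "https://github.com/code4craft/webmagic");
        System.out.println(repo.getAuthor());
    }
}
```

The model class stays free of crawling logic, which is the appeal of the annotation approach: extraction rules sit next to the fields they populate.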

Docs and samples:

Documents: http://webmagic.io/docs/

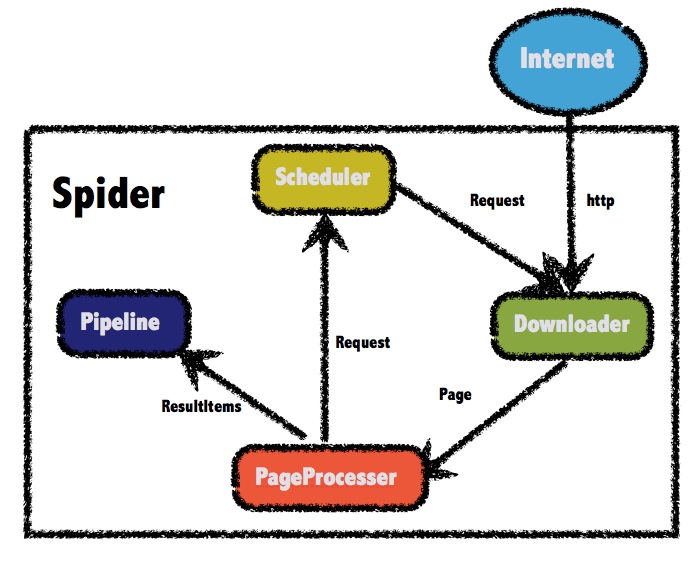

The architecture of WebMagic (modeled on Scrapy)
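As a rough illustration of the crawler lifecycle (downloading, URL management, content extraction and persistence), the sketch below runs that loop over an in-memory map instead of real HTTP. Everything in it is an assumption made for illustration: the class CrawlLoopSketch, the crawl method and the toy "web" are hypothetical and do not reflect WebMagic's actual components or threading.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.Set;

class CrawlLoopSketch {

    /** Crawls an in-memory "web" (URL to outgoing links) and returns the stored URLs in visit order. */
    static List<String> crawl(Map<String, List<String>> web, String startUrl) {
        Queue<String> pending = new ArrayDeque<>(); // URL management: what to fetch next
        Set<String> seen = new HashSet<>();         // URL management: duplicate removal
        List<String> stored = new ArrayList<>();    // persistence: stand-in for a real store

        pending.add(startUrl);
        seen.add(startUrl);
        while (!pending.isEmpty()) {
            String url = pending.poll();
            // Downloading: look the page up instead of issuing an HTTP request.
            List<String> links = web.get(url);
            if (links == null) {
                continue; // "download" failed, nothing to extract
            }
            // Content extraction: the only extracted data here is the link list.
            for (String link : links) {
                if (seen.add(link)) {
                    pending.add(link);
                }
            }
            // Persistence: record the processed page.
            stored.add(url);
        }
        return stored;
    }

    public static void main(String[] args) {
        Map<String, List<String>> web = new HashMap<>();
        web.put("a", Arrays.asList("b", "c"));
        web.put("b", Arrays.asList("a", "c"));
        web.put("c", Collections.<String>emptyList());
        System.out.println(crawl(web, "a")); // prints [a, b, c]
    }
}
```

The seen set is what keeps the loop from revisiting pages when links form a cycle, as they do between a and b here.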

Javadocs: http://code4craft.github.io/webmagic/docs/en/

There are some samples in the webmagic-samples package.

License:

Licensed under the Apache 2.0 license.

Contributors:

Thanks to these people for committing source code, reporting bugs or suggesting new features:

- ccliangbo

- yuany

- yxssfxwzy

- linkerlin

- d0ngw

- xuchaoo

- supermicah

- SimpleExpress

- aruanruan

- l1z2g9

- zhegexiaohuozi

- ywooer

- yyw258520

- perfecking

- lidongyang

- seveniu

- sebastian1118

- codev777

Thanks:

To write WebMagic, I referred to the projects below:

- Scrapy: a crawler framework in Python.

- Spiderman: another crawler framework in Java.

Mailing list:

https://groups.google.com/forum/#!forum/webmagic-java

http://list.qq.com/cgi-bin/qf_invite?id=023a01f505246785f77c5a5a9aff4e57ab20fcdde871e988

QQ Group: 373225642